Meta, the parent company of Facebook and Instagram, announced Tuesday a series of new steps aimed at protecting children on its social media platforms.

What You Need To Know

- Meta, the parent company of Facebook and Instagram, announced Tuesday a series of new steps aimed at protecting children on its social media platforms

- The company said it will begin removing more types of content, including content about self-harm and eating disorders, from teens’ accounts

- Meta is also automatically placing teen users on Facebook’s and Instagram’s most restrictive content control settings and sending them new notifications encouraging them to update their settings to be more private

- The social media behemoth has faced pressure to better protect children online following allegations that its apps are addictive and contribute to mental health issues for young users

The company said it will begin removing more types of content, including content about self-harm and eating disorders, from teens’ accounts. Facebook and Instagram already do not recommend such content in their Reels and Explore sections but are expanding the restriction to other parts of the apps, even when the content is posted by someone the user follows.

Additional terms related to suicide, self-harm and eating disorders are also being restricted in searches.

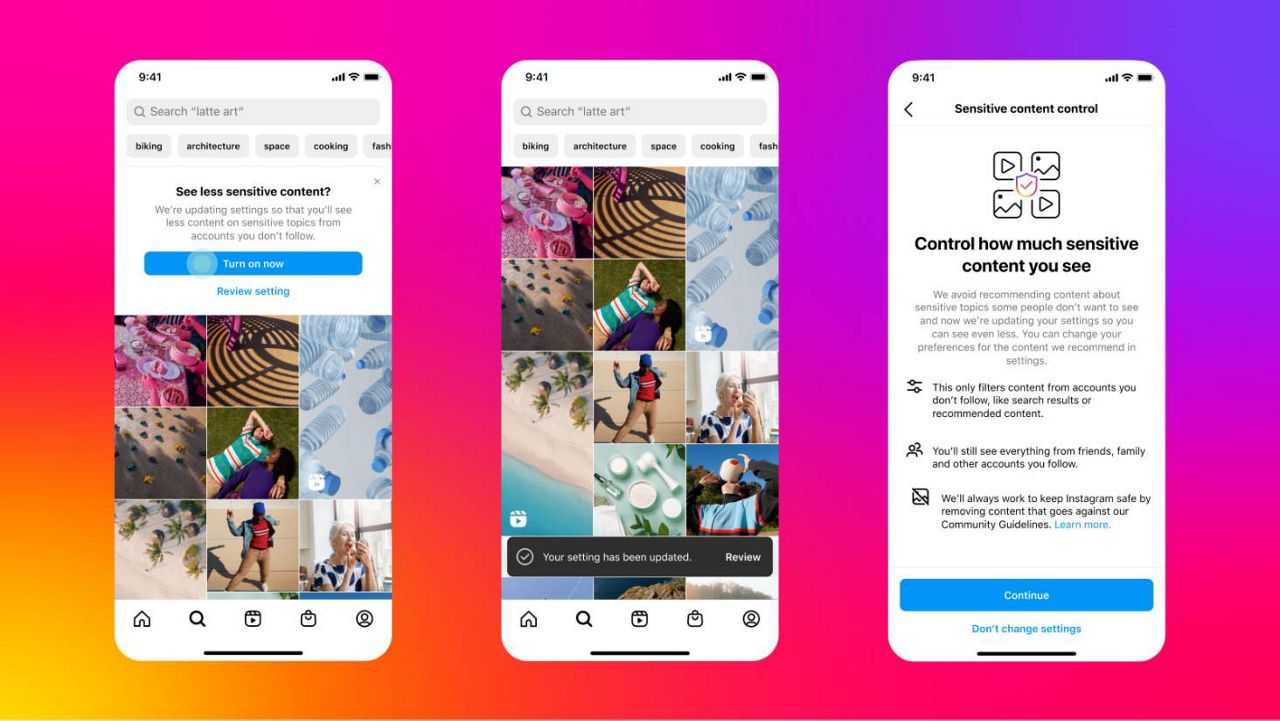

Meta is also automatically placing teen users on Facebook’s and Instagram’s most restrictive content control settings. The platforms have already been doing that for new teen users but are expanding it to teens already using the apps.

In addition, Meta is sending teen users new notifications encouraging them to update their settings to be more private, and they can make that change with a single tap.

Meta said it regularly consults with experts in adolescent development, psychology and mental health to help ensure its young users are viewing age-appropriate content. The company noted it had already introduced more than 30 tools aimed at helping children have safe, positive experiences on its apps.

“Meta is evolving its policies around content that could be more sensitive for teens, which is an important step in making social media platforms spaces where teens can connect and be creative in age-appropriate ways,” Rachel Rodgers, an associate psychology professor at Northeastern University and one expert Meta consulted with, said in a post on the company’s website.

“These policies reflect current understandings and expert guidance regarding teen’s safety and well-being,” Rodgers said. “As these changes unfold, they provide good opportunities for parents to talk with their teens about how to navigate difficult topics.”

Meta said it has begun rolling out the changes, which are expected to be fully in place in the coming months.

The Organization for Social Media Safety, a watchdog group, said in an emailed statement to Spectrum News that it is “perplexed” that Meta, which has been in operation for about 20 years, has not yet been able to stop teens from being exposed to dangerous content.

“We will wait to see what the empirical reduction in harmful content exposure will be, as that is the only metric that matters regarding child safety,” the statement said.

The organization argued that the new default settings can easily be changed and the new policies do not appear to directly address other issues such as cyberbullying, hate speech, drug trafficking and sexual predation.

Meta has faced pressure to better protect children online following allegations that its apps are addictive and contribute to mental health issues for young users.

A handful of bills aimed at protecting children on social media were introduced in Congress last year, but none has gained traction.

In October, attorneys general for 33 states filed a federal lawsuit against Meta, alleging the company designed and deployed addictive features on Instagram and Facebook that negatively affect children and teenagers.

In November, Arturo Béjar, a former Facebook engineer turned whistleblower, testified on Capitol Hill that he had warned people at the highest ranks of the company about the dangers its platforms can pose to adolescents but that his concerns were ignored.

Meta insisted in a statement after the hearing that it takes protecting its young users seriously and does not prioritize profits over safety.

Note: This article has been updated to include comments from the Organization for Social Media Safety.