Facebook and Instagram are hosting content that promotes the recruitment efforts and glorifies the brutal violence of the Russia-aligned mercenary organization the Wagner Group despite rules established by the social media networks’ parent company, Meta, intended to bar such posts and pages from its platforms, according to a new report.

The Wagner Group has emerged as a major tool of Russian President Vladimir Putin’s war machine in Ukraine and operations across the globe, particularly in Africa. The private army’s role in the war in Ukraine is in question after its leader, Yevgeny Prigozhin, led his fighters on a march in June from the front to the outskirts of Moscow in an apparent protest of the military leadership’s strategy in the war. For now, it appears Prigozhin and his men are still aligned with Putin and are continuing their focus on Africa.

The organization has been accused of mass execution, torture and rape of civilians in Mali and the Central African Republic by the United Nations and the U.S., and the execution of prisoners of war in Ukraine by the U.N. It also played a key role in Russia’s support of the Bashar al-Assad regime in Syria, bolstering the dictator’s hold on power as he orchestrated massacres and used chemical weapons on his own people.

A spokesperson for Meta confirmed that the Wagner Group is designated a “dangerous organization” within the company’s moderation policies, meaning it is prohibited from having a presence on its platforms.

But a new report published Thursday by an anti-extremism nonprofit, the Institute for Strategic Dialogue, suggests Meta has failed to follow its own policies. ISD’s analysis shows pro-Wagner content continues to be hosted by Facebook and Instagram, and the Facebook recommendation algorithm is pushing users to join pro-Wagner groups.

“It's content that supports an organization that has been involved in the murder and torture of civilians. There's no ambiguity about how brutal some of these activities are and then how violent some of the content is as a result,” said Melanie Smith, the head of research for ISD’s U.S. office. “Meta platforms are being used as a way to kind of funnel users towards more graphic content on other platforms. And if there was a more consistent enforcement approach being taken here, we wouldn't see that happening.”

Meta did not comment on the report beyond noting through a spokesperson that the Wagner Group “is not permitted to have a presence on our platforms and we regularly remove assets when we identify those with clear ties to the organization.” According to its transparency center, Meta removed 14.5 million pieces of content connected to terrorist groups that violated its “dangerous organizations and individuals” policy in the first quarter of this year.

Over the course of just a few weeks this summer, the report’s authors used the basic tactic of creating a research profile to view, but not like, comment on or share, pro-Wagner material on Facebook and Instagram. Between the two platforms, ISD researchers found 114 accounts “that either impersonated or glorified Wagner or posted recruitment content.” Collectively, the posts garnered several hundred thousand users across 13 languages, with some accounts and pages dating back nearly six months.

“We were surprised by the volume of content and also the really quite horrific graphic nature of some of the cases,” Smith said.

Notably, Facebook’s recommendation system pushed additional pro-Wagner content onto the research account, via both direct notifications to the user and through the platform’s “Discover” section, where it recommends groups and pages it believes the user may be interested in.

“This means that not only is Meta failing to detect content supporting the Wagner group on its platforms, but its algorithms may actually be automatically amplifying this content to users,” wrote the report’s authors, Julia Smirnova and Francesca Visser.

Meta’s own policies disallow “organizations or individuals that proclaim a violent mission or are engaged in violence to have a presence” on its platforms. Its “dangerous organizations and individuals” policy has three tiers of enforcement, with the highest tier, “Tier 1,” focusing on “entities that engage in serious offline harms” and including terrorist, hate and criminal organizations.

Praise, substantive support and representation of “Tier 1” groups and their leaders, founders and prominent members are banned on Facebook and should be removed, according to Meta’s own policies. On Thursday, Meta confirmed the Wagner Group belongs in its “Tier 1” designation.

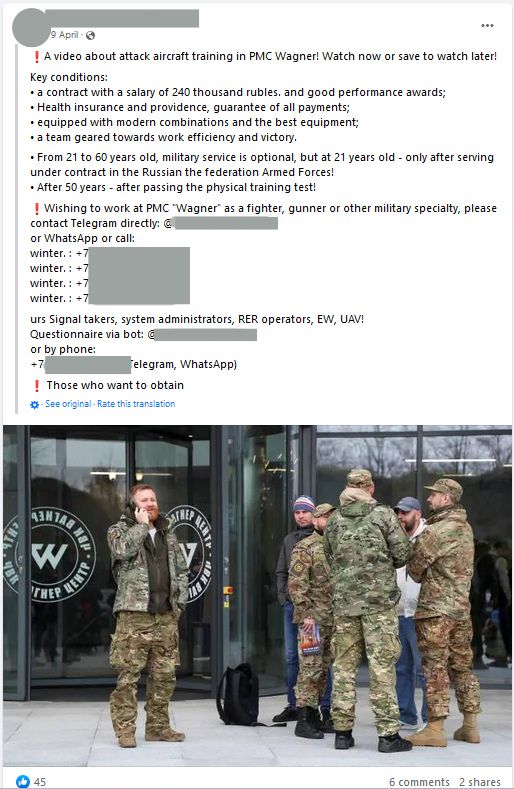

Content observed by the researchers included videos promoting Prigozhin and propaganda encouraging viewers to join the mercenary group, both apparent violations of Meta’s policies. The recruitment posts included “seemingly authentic contact details, such as phone numbers, Telegram bots and channels or links to the Wagner official website.” ISD notes, though, that it could not independently link the Facebook pages, groups and profiles to the Wagner Group.

One French-language post from April included in the report advertised a salary of 240,000 rubles (roughly $2,500) along with “good performance awards,” though it did not note whether the salary was weekly, monthly or annual. Health insurance, “the best equipment,” and “a team geared towards work efficiency and victory” were mentioned as perks of the job. Phone numbers and Telegram channels were listed for interested parties.

The accounts examined by ISD posted in English, French, German, Italian, Spanish, Portuguese, Arabic, Macedonian, Polish, Romanian, Indonesian, Vietnamese and Russian. The 15 most popular Facebook pages — with more than 10,000 followers or members each — posted in French, Arabic and Macedonian. Recruitment posts were also found in Russian, Spanish and Romanian.

Two of the largest pages posted in French to a combined audience of over 177,000 followers, specifically targeting recruits for Wagner Group activities in Mali and Côte d'Ivoire, former French colonies where the language is still widely used.

In Ukrainian groups, the pro-Wagner pages posted messages urging Ukrainians to “provide information about Nazis,” which the researchers note is a pro-Kremlin phrase for pro-Ukrainian forces.

And on Instagram, accounts examined by ISD posted recruitment videos that included testimonials from Wagner soldiers in the battle-torn Ukrainian city of Bakhmut, the site of Europe’s bloodiest urban combat since World War II.

“In the video, the soldier reveals that he had no prior military experience and had not served in the army before joining the group,” the report’s authors write. “He passionately describes the group as a ‘big family’ and assures prospective recruits that they will receive thorough training and a warm welcome upon joining.”

Another video shared on the pages depicted a Wagner fighter posing with dozens of dead bodies, allegedly Ukrainians killed in Bakhmut.

Even more included combat footage from Ukraine, the Central African Republic and Syria, often featuring superimposed symbols of sledgehammers, a reference to the 2017 videotaped torture and execution of a Syrian Army deserter allegedly at the hands of Wagner Group members. The sledgehammer, used to beat the deserter to death, has become a rallying cry for the Wagner Group and its supporters.

Videos apparently showing Wagner Group soldiers executing enemy combatants in Ukraine have surfaced at least twice since the invasion last year, according to ABC News.

“This is content that is supporting what Meta itself has called a ‘dangerous organization.’ So it kind of begs the question as to why these policies would exist in the first place if they're not willing to take a comprehensive enforcement approach to them?” said Smith. “This content was easy to find. This wasn't an investigation that we ran over months and used hundreds of different keywords. This was really simple searches. So it does kind of bring us to the question of, how has this not been done internally?”

Meta’s content moderation systems have long been the subject of criticism. Amnesty International said last year the company’s algorithms “substantially contributed to the atrocities perpetrated by the Myanmar military against the Rohingya people,” a minority group in the country. United Nations officials have called for Myanmar generals to be tried for genocide and implicated Facebook in inciting violence.

And in June, Meta launched a taskforce in response to a Stanford University report that identified Instagram’s content recommendation algorithms as playing a key role in promoting accounts offering child sexual abuse materials.

The company is also facing several lawsuits from current and former members of its content moderation teams for inadequately supporting their efforts.

“We're trained to do this kind of thing, and we receive resources as a result, but the average person going about their day on Facebook and Instagram, looking up news related to the war in Ukraine, you really should be protected from this kind of content,” Smith said, noting ISD has procedures in place that include mandatory trauma counseling to help its researchers handle the graphic content they consume. “The potential for them to come across it and be even more disturbed is something that I feel really passionately should not be happening.”