The federal government will soon have invested half a billion dollars in 25 national artificial intelligence institutes, intended to back “responsible innovation that advances the public good,” according to plans announced by the White House Thursday.

The administration will invest $140 million to fund seven new artificial intelligence research centers as part of the Biden-Harris administration’s plans to promote “responsible American innovation in artificial intelligence,” with the goals of protecting individual rights and safety. The White House’s Office of Management and Budget will also soon issue policy guidance on the federal government’s use of AI, a White House official said, speaking with reporters on background.

“As is true of all technologies, we know there are some serious risks. As President Biden has underscored, in order to seize the benefits of AI, we need to start by mitigating its risks,” the White House official told reporters. Doing so, the official said, will lay the groundwork for responding effectively to more powerful technologies in the future.

The national AI institutes are tasked with promoting responsible innovation, building up the country’s research and development infrastructure and supporting a diverse AI workforce.

Responsibility for mitigating those risks, officials said, is shared between the government and the tech companies building AI products.

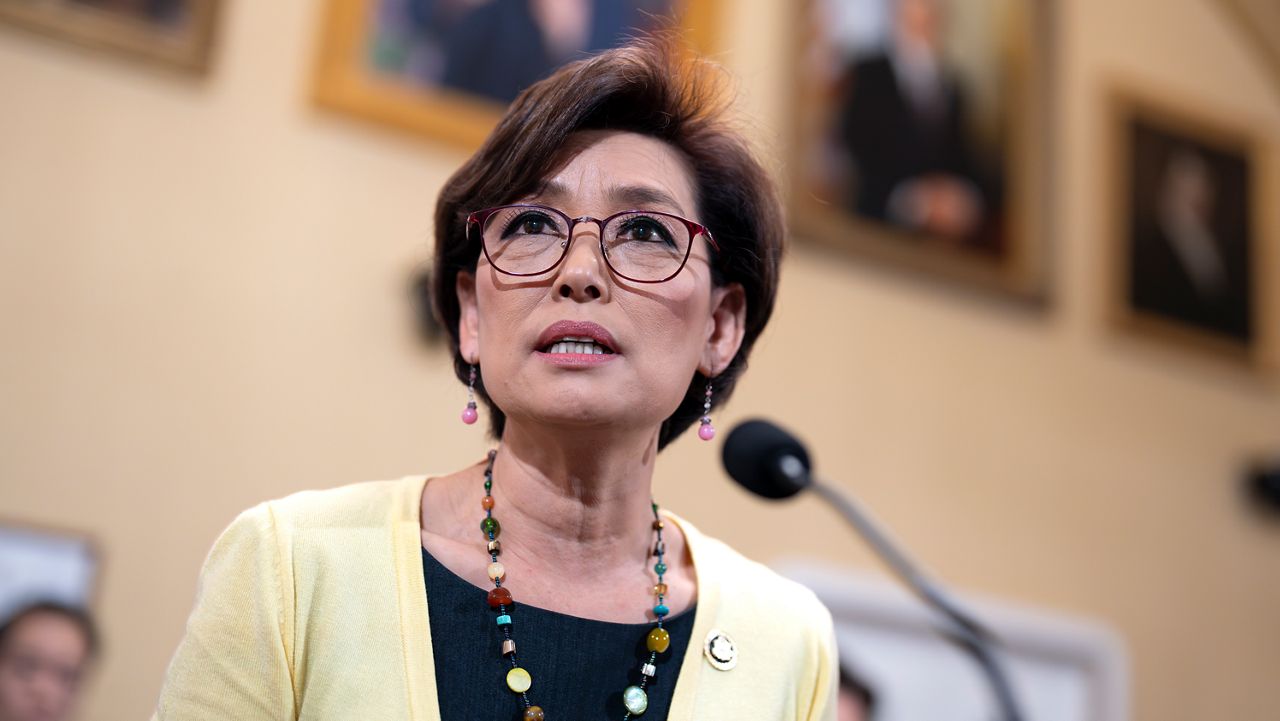

To that end, Vice President Kamala Harris met Thursday with the CEOs of four American companies leading the field in AI development: Google parent company Alphabet; Anthropic, an AI developer focused on AI safety; ChatGPT developer OpenAI; and Microsoft.

"Advances in technology have always presented opportunities and risks, and generative AI is no different," Harris said in a statement after the meeting. "AI is one of today’s most powerful technologies, with the potential to improve people’s lives and tackle some of society’s biggest challenges. At the same time, AI has the potential to dramatically increase threats to safety and security, infringe civil rights and privacy, and erode public trust and faith in democracy."

The vice president called the challenges posed by advances in technology "complex," recalling her time as California's attorney general, when she worked on cases involving online scams, data breaches and harassment, and her work as a U.S. senator probing Russian interference in the 2016 election.

"Through this work, it was evident that advances in technology, including the challenges posed by AI are complex," she wrote. "Government, private companies, and others in society must tackle these challenges together. President Biden and I are committed to doing our part – including by advancing potential new regulations and supporting new legislation – so that everyone can safely benefit from technological innovations."

"As I shared today with CEOs of companies at the forefront of American AI innovation, the private sector has an ethical, moral, and legal responsibility to ensure the safety and security of their products," Harris concluded. "And every company must comply with existing laws to protect the American people. I look forward to the follow through and follow up in the weeks to come."

The discussion, officials said, was meant to “underscore the importance” of the tech industry’s role in mitigating risks and to bring companies together to protect the public in a way that lets everyone benefit from AI innovation.

“We do think that these companies have an important responsibility and many of them have spoken to their responsibilities,” the official said. “Part of what we want to do is make sure we have a conversation about how they’re going to fulfill those pledges.”

Officials also announced that a handful of leading AI developers, including Anthropic, Google, Hugging Face, Microsoft, NVIDIA, OpenAI and Stability AI, will allow their models to be publicly evaluated at DEF CON 31, the world’s largest and longest-running hacker convention.

The White House has made the future of AI a priority, last year publishing its “Blueprint for an AI Bill of Rights.”

That blueprint prioritizes protection against algorithmic discrimination, data privacy and access to human alternatives to AI chatbots and other automated systems.

As the Biden-Harris administration works to strengthen protections for the public, federal agencies have begun using some AI models and applications in their day-to-day work.

According to Government Executive, a news site focused largely on federal employees and workforce trends, the Office of Personnel Management is using chatbots to screen and triage help requests from federal employees and retirees, and it may not stop there: the agency plans to use AI tools to help rewrite job descriptions.